Pulling Data from Google Alert Emails

Do you have Google Alert emails as far back as the beginning of time? This post is for you.

Since June 2017, I’ve had a Google Alert set up to track news on “insulin prices.” I used to pour over these whenever they’d show up in my inbox. It was nice validation that the activism work I and many others were doing was starting to gain traction and move the issue forward. Now, I can’t keep track of all the alert emails that show up in my inbox.

I wanted a way to get a complete listing of all news mentioning insulin prices from my Google Alerts history.

The problem is Google is generally pretty adverse to web scraping and it’s sometimes difficult to get at the underlying data (even your own!) in their systems — especially email. While they provide a nice RSS link to alerts, it only contains the most recent alerts. For alerts going back further in time, the only place those alerts live is in your Gmail inbox.

After poking around the webs and not really seeing a great start to finish solution for what I wanted to do, I came up with the method outlined below. It’s not quite a tutorial but it is a walk through for anyone who has a similar problem and I’ve hosted my code as a gist. The method requires a Gmail account, Google Apps Script, Google Drive, and a few libraries in Python.

For Python 3, I have the Anaconda distribution installed. It comes with all the libraries needed for this walk through — except for Jack Cushman’s pdfquery library.

The Method

Create a new label in Gmail and label any alert emails to download with this label.

Create a Google Apps Script based off of the script here. This script will go through labeled alerts and download them to PDF into folders on Google Drive.

Download folders from Google Drive.

Iterate through folders and PDF files using the pdfquery library to grab alert date, link to article, article title, publication and summary text.

1. Gmail

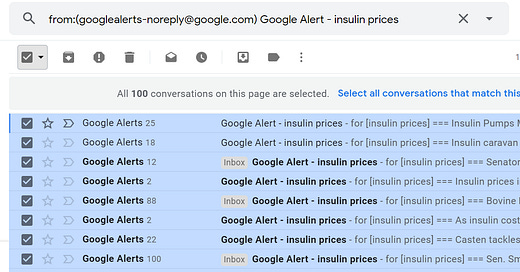

In Gmail, create a search that is specific enough to capture all and only the alert emails you want to pull information from. Click the checkbox to select all conversations and click the link to “Select all conversations that match this search.”

From here, we want to apply a newly created label to these emails.

Note— once the Google Apps Script piece processes and the PDF of the email is saved to Google Drive, all of the “alert” labels will be removed from these emails. It may be prudent to label these as something different when the process is finished to keep track in the future of what has been processed and what hasn’t.

2. Google App Script

Open up Google Apps Script and create a new script. Copy and paste the code from this blog post into the editor.

set gmailLabels variable to the label used to tag the alert emails

set driveFolder variable to what you want to call the folder on Google Drive that will hold the PDFs. Note, because each script has a maximum run time, you may have to run this multiple times to download all the labeled alerts. Each time I ran this, I set this variable to a new name.

Added the “t” variable (the iteration number) to the PDF name at the end so that in each folder, each PDF would have a different name.

Once it is customized to your liking, try running the script.

Warning — Google thinks this script is sketchy because it’s from a third-party, non-verified developer! It works out of the box, which is amazing but you will have to authorize it to connect to your Gmail and Google Drive. You will also have to ignore the warning that pops up, “back away from safety”, and proceed with running the script.

Google Apps Script has a maximum run time for each script. Because I had over 400 alerts I wanted to download, I hit the maximum run time. Because the script automatically un-labels the tag for an email once the alert email is downloaded, all it takes is simply re-running the script to continue the download process. Each time I had to restart, I simply set the driveFolder variable to something new.

3. Google Drive

Once all alerts have been processed through the Google Apps Script, head over to Google Drive to find folders with the PDFs. Right click and choose “Download.” Google Drive will zip these and the download will start.

Choose a folder location on your computer and unzip the downloads. If there’s multiple folders, each file within the folder may only have a unique name in that folder from the Google Apps Script. It’s important to leave the folder structures as they are — Python can handle iterating through multiple files within multiple folders.

4. Python

I’ve hosted my code over on a gist. The base_directory variable should be changed to the file location that hosts all the downloaded folders. The subfolders variable should be a list of all the folder names that contain the Google Alert email PDFs.

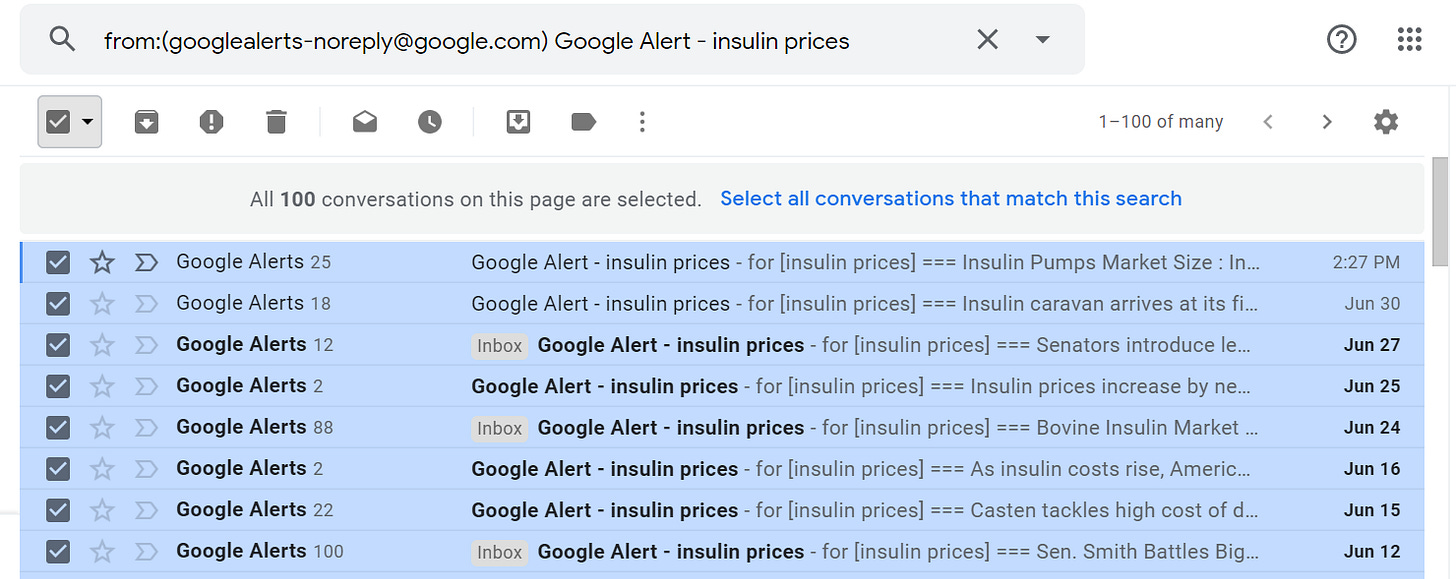

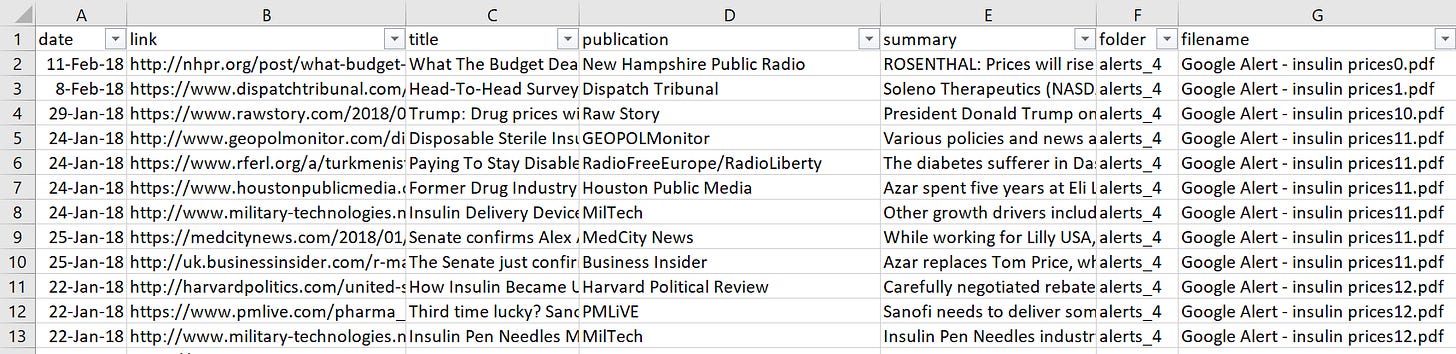

From there, the code works by iterating through each PDF and parsing the PDF using pdfquery. The logic for this is a little wonky — it uses relative and physical anchors from each alert to pull alert date, link to article, title of article, publication and the text summary and outputs a .csv file.

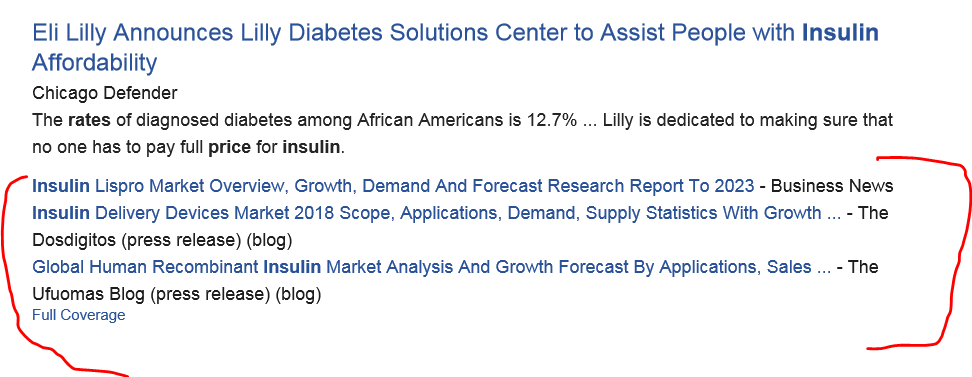

One caveat — the code does not include any links that are added on to another alert as part of the “Full Coverage.”

Overall, I’m pretty thrilled that I got this to work.

The resulting data are as messy as Google Alerts are and it’s going to take some time to get the data in the shape I want it to be in. My next steps are:

Operationalize this so that I can easily run it on a recurring basis to stay on top of the news.

Filter out articles that aren’t relevant to activism work (market analysis, trends, etc) from the data.

Use libraries like Lucas Ou-Yang’s newspaper to see if I can pull any additional information. Or, maybe I will engage in some more intense web scraping.

Use the data to identify gaps in coverage by the media on this issue and as another way to quantify to people who make policy the impact insulin prices have on peoples’ lives.

I’m looking forward to continuing to work with this and hope this guide was helpful for anyone who would like to do something similar!